This Week in AI: To the Moon(shot)

Plus: This is not the podcast voice you think it is...

PROMPTS REPORT AND DEREK BERES | MAY 30, 2023

Interstellar therapeutics

“The art of medicine is long, Hippocrates tells us, and life is short; opportunity fleeting; the experiment perilous; judgment flawed.” (Siddhartha Mukherjee, The Emperor of All Maladies)

🌕 Despite generations of research, treatments, false starts, and President Biden’s “moonshot,” cancer remains an integral part of our biology. We’ll all live with cancer cells our entire lives. The hope is that they stay dormant.

Yet roughly half of us will get cancer. I’m a survivor and, given my family history, will likely battle it again.

💻 While detection and treatment have improved dramatically, some of the most promising AI-driven solutions to date borrow a technique from astrophysics.

- “The VIPER algorithm…starts with what we can measure about a tumor but then delves beneath the surface to infer the hidden layer of master regulator proteins that drive the disease state of cancer cells. This feat was previously considered beyond our technological grasp. By analyzing gene expression profiles, it can decipher the unique pattern of master regulators in each tumor and the existing drugs that can deactivate them.”

🪐 The approach mirrors how astrophysicists find black holes and exoplanets: indirectly, by measuring their effects on objects they can observe. The cancer researchers turned the technique inward, searching for microscopic hints rather than telescopic big pictures.

- “Our experiment showed that drugs prioritized by the algorithm predicted, with 91% accuracy, the ability to achieve disease control in human tumors transplanted into mice. Notably, drugs favored by the algorithm significantly outperformed cancer drugs that were predicted not to work, which were used as controls.”

As with all research, more work is needed. But with 250 FDA-approved cancer drugs and 200 types of cancer, there are already 50,000 possible drug-cancer pairings (250 × 200) before counting combinations. A tool that narrows that field could be one giant leap toward the moon.

Flight of the founding fathers

🤖 OpenAI CEO Sam Altman recently implored Congress to regulate his industry, though not everyone believes it was a good-faith argument. And his testimony overshadowed a more important presentation taking place that same day, three flights up in the same building.

- “It got maybe 1/100th of the attention. It was looking at all the ways in which AI is being used across the board to actually impact our lives in ways that have been going on for years, and that people still aren't talking enough about.”

The thrust of that presentation was protecting workers’ rights rather than rushing to cut costs by shrinking the workforce. Suresh Venkatasubramanian, director of Brown University’s Center for Tech Responsibility, worries the media fell for a masterful marketing strategy by Altman. As he frames it:

- “His company is the one that put this out there. He doesn’t get to opine on the dangers of AI. Let’s not pretend that he's the person we should be listening to on how to regulate… We don’t ask arsonists to be in charge of the fire department.”

📝 Venkatasubramanian co-authored the White House’s Blueprint for an “AI Bill of Rights,” which calls for safety measures, protections against discrimination, data privacy, and worker protections.

OpenAI also published “Governance of superintelligence,” a post that asks companies to act responsibly but is long on aspiration and short on specifics.

- “We believe [AI’s] going to lead to a much better world than what we can imagine today (we are already seeing early examples of this in areas like education, creative work, and personal productivity)... Second, we believe it would be unintuitively risky and difficult to stop the creation of superintelligence. Because the upsides are so tremendous, the cost to build it decreases each year, the number of actors building it is rapidly increasing, and it’s inherently part of the technological path we are on, stopping it would require something like a global surveillance regime, and even that isn’t guaranteed to work. So we have to get it right.”

What “right” means depends on who’s making the pitch.

Or writing the code.

In alignment

📘 CNBC has created a short, useful dictionary of AI terms to bring everyone up to speed on the basics. A few examples:

- Alignment is the practice of tweaking an AI model so that it produces the outputs its creators desire. In the short term, alignment refers to the practical work of building software and moderating content. But it can also refer to the much larger and still theoretical task of ensuring that any AGI would be friendly towards humanity.

- Emergent behavior is the technical way of saying that some AI models show abilities that weren’t initially intended. It can also describe surprising results from AI tools being deployed widely to the public.

- Stochastic parrot is an important analogy for large language models. It emphasizes that while sophisticated AI models can produce realistic-seeming text, the software, like a parrot, doesn’t understand the concepts behind the language.

Dead ringer

🎙️ With so much attention on AI replicas of singers, another group is about to be reproduced by AI: podcasters.

That’s the news from sportswriter Bill Simmons, who founded the podcast network The Ringer in 2016.

- In 2020, Spotify snatched The Ringer for $200 million.

🏀 While Spotify has been taking down AI-generated songs, the platform appears to be preparing an AI-generated ad-reading service. So if you hear Simmons selling NBA League Pass, that might not be the voice you think it is.

Only, it is. Kind of.

- “There is going to be a way to use my voice for the ads. You have to obviously give the approval for the voice, but it opens up, from an advertising standpoint, all these different great possibilities.”

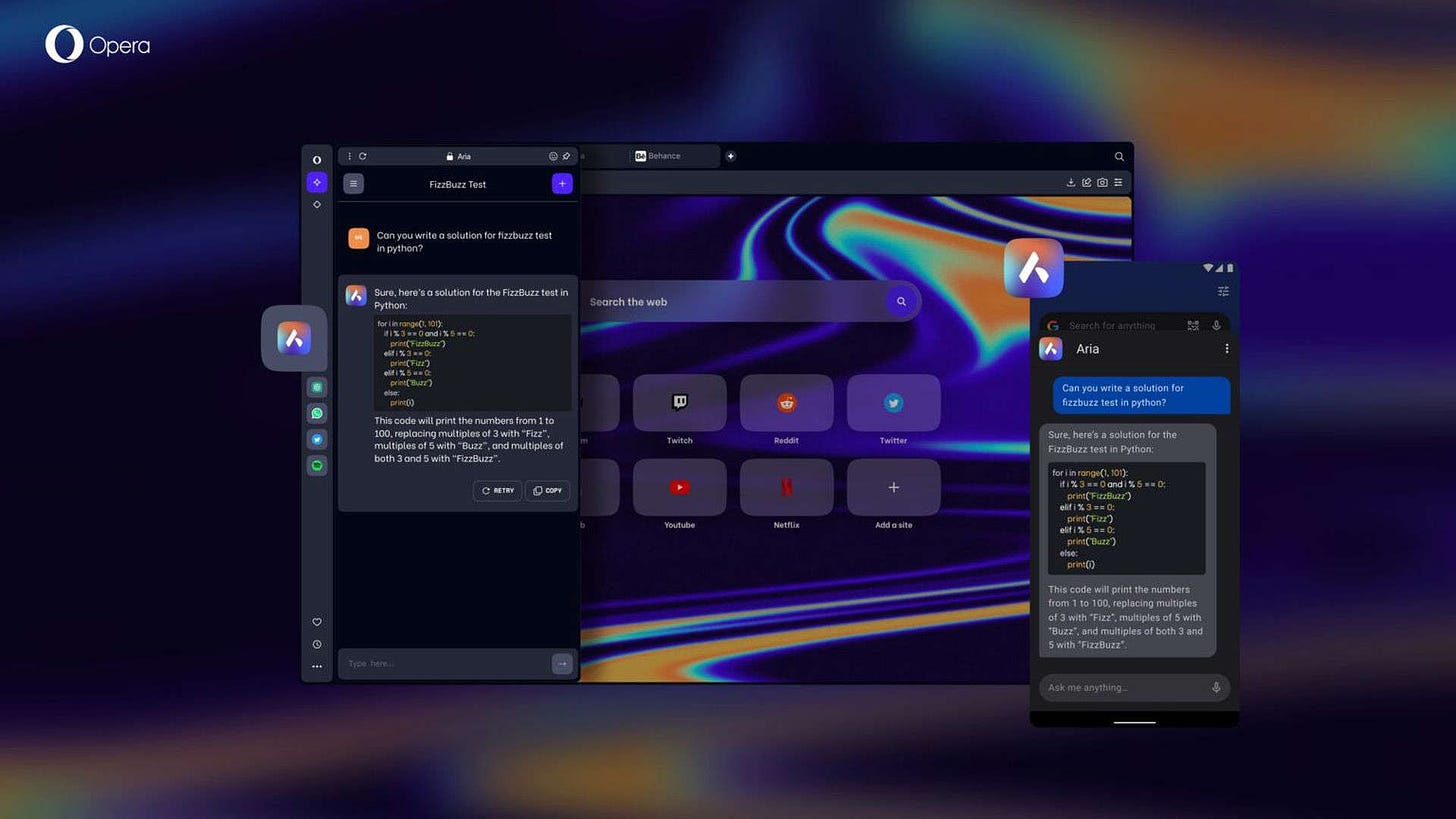

AI Tool of the Week: Aria

👩🏼‍🎤 Opera has unveiled its latest web-browsing feature: Aria, an AI-powered sidebar built into its browser. According to a post on Opera’s blog, Aria uses OpenAI’s ChatGPT to generate text, write code, and answer questions.
