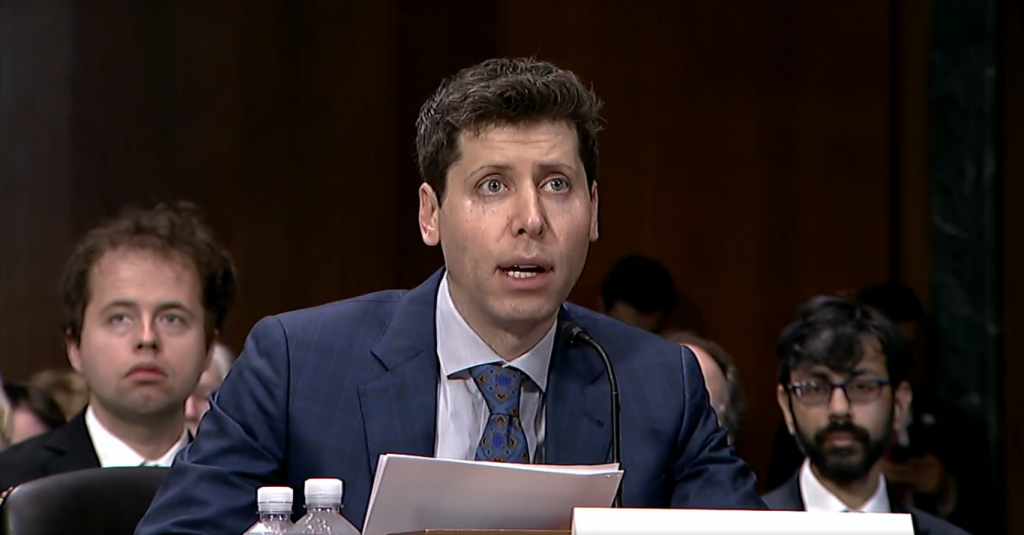

OpenAI CEO Sam Altman Predicts Superintelligence Surpassing Human Expertise Within a Decade; Calls for Regulation and Preparation

OpenAI CEO Sam Altman and fellow executives Greg Brockman and Ilya Sutskever made a bold prediction in a blog post on Monday: artificial intelligence (AI) is likely to exceed "expert skill level" in most fields within the next decade. The trio also declared that halting the emergence of superintelligence is not feasible.

"Superintelligence will be more powerful than other technologies humanity has had to contend with in the past, both in terms of potential upsides and downsides," the executives wrote. The prediction and its accompanying caution come just days after Altman warned a Senate committee about the potential pitfalls of AI.

The rapid advancement of AI tools such as OpenAI's ChatGPT and Google's Bard has sparked debate and concern about their impact across multiple sectors. Their repercussions are being felt widely, from threatening nearly one in five jobs to reshaping education as students increasingly lean on AI for academic assistance.

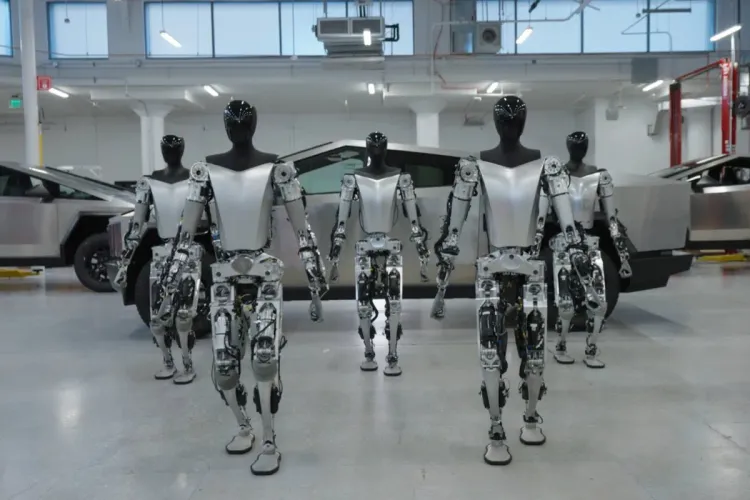

Despite the potential pitfalls, the OpenAI executives emphasized that AI's growing power could benefit humanity significantly. However, they insisted that careful regulation will be necessary to prevent harm as AI evolves into "superintelligence."

"Nuclear energy and synthetic biology are examples of technologies that posed existential risks, hence the necessity to be proactive rather than reactive," the executives wrote, drawing parallels between past disruptive technologies and AI.

"Superintelligence will require special treatment and coordination," they added, emphasizing the need for risk mitigation with current AI technologies as well.

The OpenAI leaders suggested that an agency akin to the International Atomic Energy Agency, which oversees the nuclear industry, might be needed to oversee superintelligence. In their view, such an international authority could inspect systems, require audits, test for compliance with safety standards, and place restrictions on deployment and security levels for efforts that exceed certain capability or resource thresholds.

Attempts to obstruct the emergence of superintelligence would prove futile, the executives wrote. In their view, superintelligence is an inherent part of the current technological trajectory, and preventing it would require a global surveillance regime that might still fall short.

"Superintelligence is coming, and we have to get it right," the executives stressed, underlining the urgency for both the technology and regulatory communities to prepare for this technological leap.
