Microsoft Discovers Indications of Human-like Reasoning in AI

Last year, Microsoft computer scientists began experimenting with a new artificial intelligence system and gave it a task that required an understanding of the physical world. They asked it how to stack a book, nine eggs, a laptop, a bottle, and a nail in a stable way.

The AI system astounded the researchers with an inventive solution: place the eggs on the book in three rows with space between them, then set the laptop on top of the eggs, keyboard facing up. The laptop would provide a stable platform for further stacking.

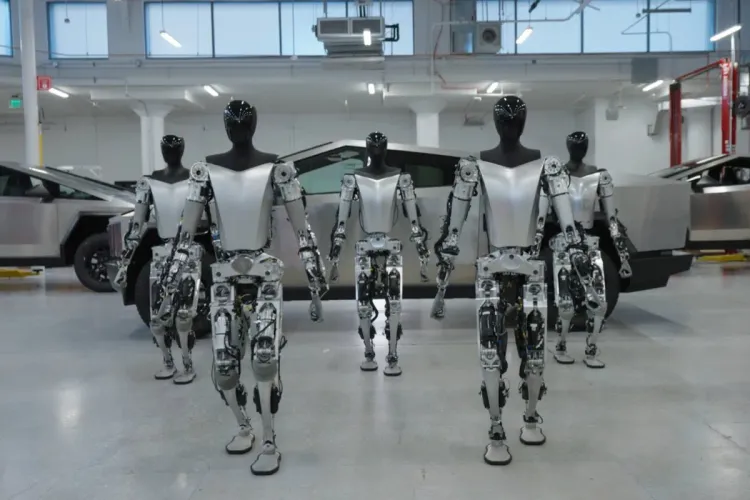

This unexpected answer made the researchers wonder whether they were witnessing a new form of intelligence. In March, they published a 155-page paper titled “Sparks of Artificial General Intelligence,” arguing that the system was a step toward artificial general intelligence (AGI), a hypothetical machine that could perform any task the human brain can.

Microsoft is the first tech giant to issue a paper making such a bold claim, igniting a fierce debate in the tech community: are we on the brink of creating human-like intelligence, or are we allowing our imaginations to get carried away?

Microsoft's controversial paper goes to the heart of what technologists have been both pursuing and dreading for decades. A machine that works like the human brain, or better, could be revolutionary, but it also carries risks. Claiming to have achieved AGI can also damage a computer scientist's reputation.

But there is a growing belief within the industry that we are edging closer to a new AI system that generates human-like responses and ideas without being explicitly programmed to do so.

Microsoft has restructured its research labs to include several groups dedicated to exploring this idea, one of which will be led by Sébastien Bubeck, the lead author of the Microsoft AGI paper.

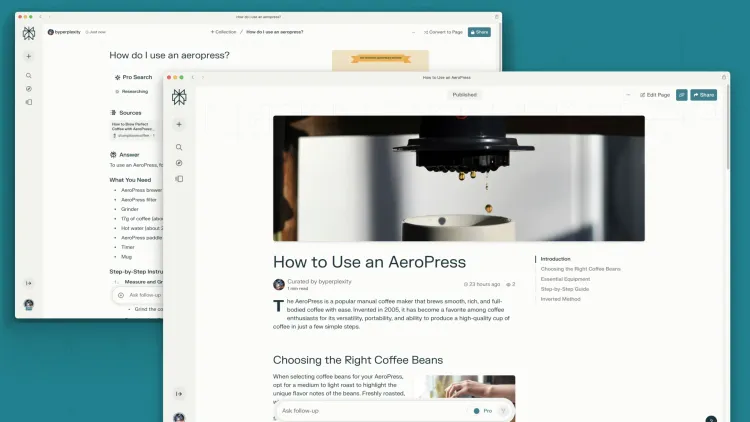

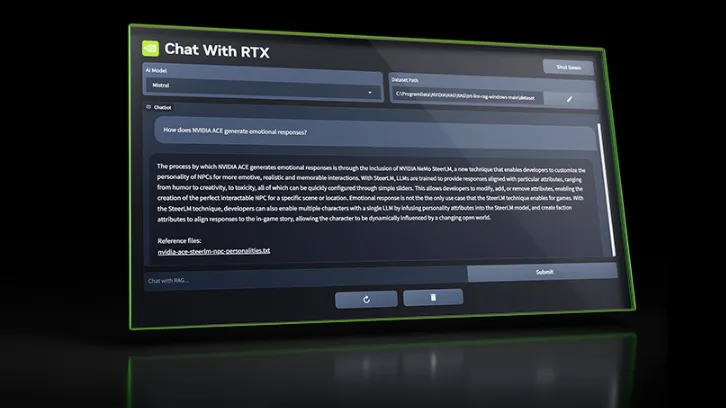

A few years ago, companies such as Google, Microsoft, and OpenAI began building large language models (LLMs): systems that analyze vast amounts of digital text and learn to generate their own, including academic papers, poetry, and computer code.

The Microsoft researchers asked OpenAI’s GPT-4, currently the most powerful of these systems, to write a mathematical proof, in rhyme, showing that there are infinitely many prime numbers. The quality of the result astonished them.

The system also handled tasks such as writing code in a programming language to draw a unicorn, estimating a person's risk of diabetes from personal data, and composing a Socratic dialogue on the misuse and risks of LLMs. It appeared to draw on knowledge from fields as diverse as politics, physics, history, computer science, medicine, and philosophy.

Some AI experts, however, see the Microsoft paper as an opportunistic attempt to make grandiose claims about a technology that is still not fully understood.

Despite these impressive capabilities, the system still shows clear limitations at times. The behavior of systems like GPT-4 is inconsistent, and it remains uncertain whether the text they generate stems from anything like human reasoning.
