AI's Realism Erodes Trust in Communication, Researchers Warn

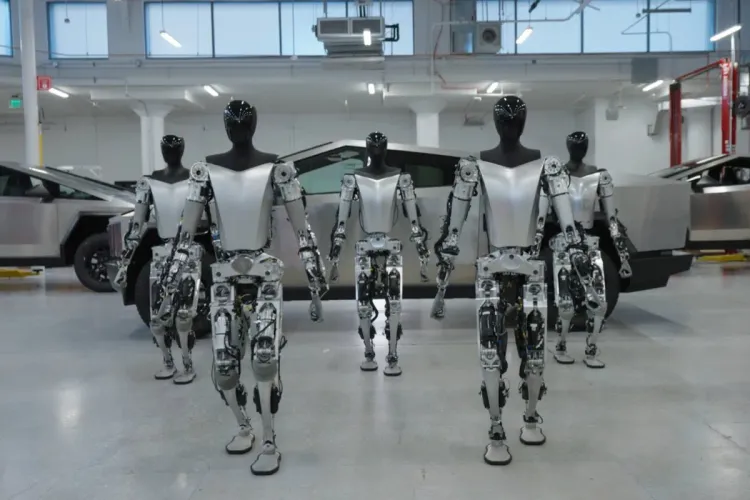

AI's increasing realism may erode our trust in communication partners, according to researchers at the University of Gothenburg. Professors Oskar Lindwall and Jonas Ivarsson explored how AI influences trust in interactions where one party could be an AI agent. They found that when people cannot fully trust a conversational partner's intentions and identity, they may become excessively suspicious, which can harm relationships.

The study revealed that even in conversations between two humans, some behaviors were interpreted as evidence that one of the participants was a robot. The researchers argue that AI's human-like voices, which create a sense of intimacy, may be problematic when it is unclear who we are communicating with. To increase transparency, they propose developing AI with voices that are eloquent yet clearly synthetic.

The researchers analyzed data from YouTube to study different types of conversations and audience reactions. Uncertainty about whether one is talking to a human or a computer affects the relationship-building and joint meaning-making aspects of communication, which could be detrimental in settings that depend on human connection, such as therapy.
